#14 Asia AI Policy Monitor - November

AI Safety Institutes meet, N. Korean AI-fueled cyber threats, facial recognition in Australia, India copyright & AI, S. Korea investigates deepfakes, SE Asia power crunch on AI

Thanks for reading this month’s newsletter along with over 1,600 other AI policy professionals across multiple platforms to understand the latest regulations affecting the AI industry in the Asia-Pacific region.

*** Recently, we provided insight about the shortages of labor and energy for AI in Japan for an AFP article. This follows a previous mention of our publication in The Economist, where we highlighted the danger of AI anthropomorphization concealing AI harms.***

Do not hesitate to contact our editors if we missed any news on Asia’s AI policy at seth@apacgates.com!

Privacy

The Office of the Australian Information Commissioner (OAIC) published guidance on the use of biometric data and facial recognition technology (FRT).

As part of this privacy by design approach, it is expected that key principles will be explored to support the appropriate use of sensitive information when using FRT, including:

Necessity and proportionality (APP 3) – personal information for use in FRT must only be collected when it is necessary and proportionate in the circumstances and where the purpose cannot be reasonably achieved by less privacy intrusive means.

Consent and transparency (APP 3 and 5) – individuals need to be proactively provided with sufficient notice and information to allow them to provide meaningful consent to the collection of their information.

Accuracy, bias and discrimination (APP 10) – organisations need to ensure that the biometric information used in FRT is accurate and steps need to be taken to address any risk of bias.

Governance and ongoing assurance (APP 1) – organisations who decide to use FRT need to have clear governance arrangements in place, including privacy risk management practices and policies which are effectively implemented, and ensure that they are regularly reviewed.

The OAIC also found that a company, Bunnings, violated Australia’s privacy rules through its use of facial recognition technology.

“We acknowledge the potential for facial recognition technology to help protect against serious issues, such as crime and violent behaviour. However, any possible benefits need to be weighed against the impact on privacy rights, as well as our collective values as a society.

“Facial recognition technology may have been an efficient and cost effective option available to Bunnings at the time in its well-intentioned efforts to address unlawful activity, which included incidents of violence and aggression. However, just because a technology may be helpful or convenient, does not mean its use is justifiable.

“In this instance, deploying facial recognition technology was the most intrusive option, disproportionately interfering with the privacy of everyone who entered its stores, not just high-risk individuals,” said Commissioner Kind.

Intellectual Property

Publishers in India sued OpenAI for infringement based on the use of their data in training the LLM. This marks the first litigation on AI training and copyright infringement in Asia, while other strategic litigation goes on in the US and Europe. Our editors at Digital Governance Asia have written on the issue in Asia for Tech Policy Press recently.

[Claimants] also alleged that OpenAI stored the data, which is in breach of intellectual property rights, while using its content and data to train their Large Language Model. The [claimants] are seeking initial damages of ₹20 million ($236,910), its lawyer Sidhant Kumar said.

Finance

Bank of Japan Governor Kazuo Ueda noted the financial risks of AI in recent statements.

“As financial services grow more diverse and complex, the channels of risk transmission have become less transparent, and current financial regulations may not be fully equipped to manage new types of financial services,” Ueda said.

“This environment underscores the need for operational resilience, including robust management of cybersecurity and third-party risks,” he said.

Environment

The Hinrich Foundation published a paper on the growing power and space constraints placed on the environment by increasing investment in internet connectivity and data centers to power AI. The paper emphasizes the impact on Southeast Asia in particular.

In Southeast Asia, the rollout of new internet infrastructure has double-edged impacts. Massive investments in renewable and clean energy to power data centers are coming online and can be expected to help underwrite a strengthening of the electricity grid, thereby creating spillover benefits. Geopolitical factors weighing on the locations of subsea cables could benefit the region and increase the importance of hubs like Singapore. They could also turn just as quickly into a geopolitical minefield.

Together with clean energy, water, and space, the routing of subsea cables and development of data centers underscore real-world obstacles for the digital economy. Global trade, e-commerce, and economic progress in general will all be adversely impacted if these implications are not addressed. As the point where East meets West, these effects will be felt first in Southeast Asia.

Trust, Safety and Community

The Korea Communications Commission (KCC) investigated Telegram over the distribution of deepfake pornography.

Earlier, on November 7, the KCC noted that most deepfake sexual crime materials have recently been distributed through Telegram, and requested that Telegram designate a youth protection manager and reply with the results in order to induce Telegram to strengthen its self-regulation.

Accordingly, Telegram designated a youth protection manager and notified them within two days, replied with a hotline email address for administrative communication, and responded to an email sent to confirm that the hotline email address was functioning normally with a response within four hours that they would “cooperate closely,” the KCC said.

Cybersecurity and Military

A UK minister announced at a NATO conference the launch of an AI lab to fight cyber threats, in particular from China, Russia, North Korea and Iran.

Last year, we saw the United States, for the first time, publicly call out a state for using AI to aid its malicious cyber activity.

In this case, it was North Korea — who had attempted to use AI to accelerate its malware development and scan for cybersecurity gaps it could exploit.

North Korea is the first, but it won’t be the last.

So we have to stay one step ahead in this new AI arms race.

And I am pleased today to announce that we are launching a new Laboratory for AI Security Research at the University of Oxford. It is backed by £8.2 million of funding from the UK government’s Integrated Security Fund.

Microsoft Threat Intelligence released a report on North Korea’s use of AI to support illicit activities, including using AI to build a repository of fake resumes and LinkedIn accounts to gain access to organizations as IT workers:

[North] Korea (DPRK) has successfully built computer network exploitation capability over the past 10 years and [...] threat actors have enabled North Korea to steal billions of dollars in cryptocurrency as well as target organizations associated with satellites and weapons systems. Over this period, North Korean threat actors have developed and used multiple zero-day exploits and have become experts in cryptocurrency, blockchain, and AI technology.

Multilateral

China, Microsoft and the UN’s International Labour Organization (ILO) collaborated on how to use AI to foster vocational training.

On 5 November 2024, the ILO project Quality Apprenticeship and Lifelong Learning in China Phase 2 successfully organized the AI for Better TVET webinar in partnership with Microsoft, kicking off the AI-VIBES Series (AI for Vocational Instructors Boosting Education and Skills) which builds the capacity of TVET teachers and in-company trainers.

Singapore and the EU signed an Administrative Arrangement on AI safety. The arrangement covers 6 issue areas:

a. Information Exchange: Sharing expertise of information and best practices on aspects of AI safety, including relevant technologies, governance frameworks, technical tools and evaluations, and research and development, for the purpose of advancing AI safety. These may include expert information exchange with related partners, such as institutes of higher learning, think-tanks, enterprises, government agencies, or other ecosystem stakeholders.

b. Joint Testing and Evaluations: Conducting assessments of general-purpose AI models to evaluate their capabilities, limitations, and risks, including challenges arising from deployment.

c. Development of Tools and Benchmarks: Creating frameworks to evaluate capabilities, limitations, and risks associated with general-purpose AI models.

d. Standardisation Activities: For general-purpose AI.

e. AI Safety Research: Advancing joint research initiatives to explore new approaches to promote safer AI systems and manage and mitigate risks.

f. Insights on Emerging Trends: Sharing perspectives on technological developments in the field of AI to better address evolving challenges.

The G20 Leaders’ Declaration included provisions on AI (G20 members from Asia are China, India, Indonesia, Japan, South Korea and Australia):

We recognize the role of the United Nations, alongside other existing fora, in promoting international AI cooperation, including to empower sustainable development. Acknowledging growing digital divides within and between countries, we call for the promotion of inclusive international cooperation and capacity building for developing countries in this domain and welcome international initiatives to support these efforts. We reaffirm the G20 AI principles and the UNESCO Recommendation on Ethics of AI…

As AI and other technologies continue to evolve, it is also necessary to bridge digital divides, including halving the gender digital divide by 2030, prioritize the inclusion of people in vulnerable situations in the labor market, as well as ensure fairness, respect for intellectual property, data protection, privacy, and security. We agree to advocate and promote responsible AI for improving education and health outcomes as well as women’s empowerment. We recognize that digital literacy and skills are essential to achieve meaningful digital inclusion. We recognize that technologies’ integration in the workplace is most successful when it incorporates the observations and feedback of workers and thus encourage enterprises to engage in social dialogue and other forms of consultation when integrating digital technologies at work.

China announced a plan to support the Global South in AI on the sidelines of the G20.

China, along with Brazil, South Africa and the African Union, were launching an "Open Science International Cooperation Initiative" designed to funnel scientific and technological innovations to the Global South.

"China supports the G20 in carrying out practical cooperation for the benefit of the Global South," [China’s leader] Xi said, according to state news agency Xinhua, adding that China's imports from developing countries are expected to top $8 trillion between now and 2030.

"China has always been a member of the 'Global South', a reliable and long-term partner of developing countries, and an activist and doer in support of global development," Xi added.

AI Safety Institutes (AISIs) from Australia, Canada, the European Union, France, Japan, Kenya, South Korea, Singapore, the United Kingdom, and the United States met in San Francisco this month for the first meeting of the International Network of AISIs. The meeting resulted in four deliverables:

The International Network Members have also aligned on four priority areas of collaboration: pursuing AI safety research, developing best practices for model testing and evaluation, facilitating common approaches such as interpreting tests of advanced AI systems, and advancing global inclusion and information sharing.

$11 million (USD) committed to research on synthetic content safety. With the rise of generative AI and the rapid development and adoption of highly capable AI models, it is now easier, faster, and less expensive than ever to create synthetic content at scale. Though there are a range of positive and innocuous uses for synthetic content, there are also risks that need to be identified, researched, and mitigated to prevent real-world harm – such as the generation and distribution of child sexual abuse material and non-consensual sexual imagery, or the facilitation of fraud and impersonation.

Australia’s national science agency, the Commonwealth Scientific and Industrial Research Organisation (CSIRO), is investing $2.2 million (AUD) ($1.42 million USD) annually in research aimed at identifying and mitigating the risks of synthetic content.

South Korea will commit $1.8 million (USD) annually for 4 years, totaling $7.2 million (USD), toward research and development efforts on detecting, preventing, and mitigating the risks of synthetic content through the ROK AISI program.

Methodological insights on multi-lingual, international AI testing efforts from the International Network of AI Safety Institutes’ first-ever joint testing exercise.

While recognizing that the science of AI risk assessment continues to evolve and that each Network member operates within its own unique context, the International Network of AI Safety Institutes agreed to establish a shared scientific basis for risk assessments, building on six key aspects outlined by the Network – namely, that risk assessments should be (1) actionable, (2) transparent, (3) comprehensive, (4) multistakeholder, (5) iterative, and (6) reproducible. The full statement can be found here.

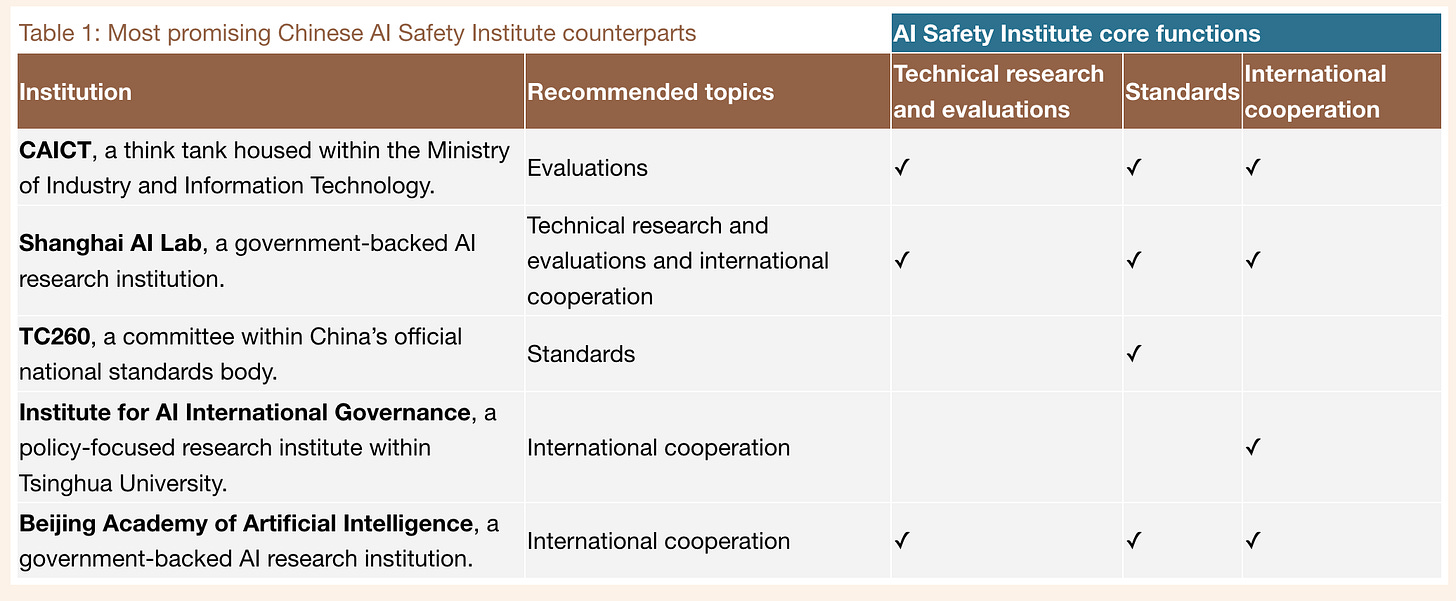

China’s absence from the International Network of AISIs meeting raises important questions about whether the country has a central stakeholder dealing with AI safety. This report from the Institute for AI Policy and Strategy identifies several organizations in China that may play such a role.

The OECD (members from the Asia-Pacific include Australia, Japan, South Korea, and New Zealand) published a paper on assessing potential future AI risks, benefits and policy priorities.

The Expert Group… put forth ten policy priorities to help achieve desirable AI futures:

1. establish clearer rules, including on liability, for AI harms to remove uncertainties and promote adoption;

2. consider approaches to restrict or prevent certain “red line” AI uses;

3. require or promote the disclosure of key information about some types of AI systems;

4. ensure risk management procedures are followed throughout the lifecycle of AI systems that may pose a high risk;

5. mitigate competitive race dynamics in AI development and deployment that could limit fair competition and result in harms, including through international co-operation;

6. invest in research on AI safety and trustworthiness approaches, including AI alignment, capability evaluations, interpretability, explainability and transparency;

7. facilitate educational, retraining and reskilling opportunities to help address labour market disruptions and the growing need for AI skills;

8. empower stakeholders and society to help build trust and reinforce democracy;

9. mitigate excessive power concentration;

10. take targeted actions to advance specific future AI benefits.

The WTO published a report on AI and trade. Case studies are provided by Singapore:

For example, Singapore has leveraged AI in order to continue to act as a global hub facilitating trade and connectivity. Singapore’s Changi Airport, which handled more than 59 million travellers last year, uses AI to screen and sort baggage, and to power facial recognition technology for seamless immigration clearance. The Port of Singapore, which handled cargo capacity of 39 million twenty-foot equivalent unit (TEUs) in 2023, uses AI to direct vessel traffic, map anchorage patterns, coordinate just-in-time cargo delivery, process registry documents, and more.

In the News & Analysis

Stanford’s Institute for Human-Centered AI published the AI Vibrancy Index. Half of the top 10 countries are in Asia: China (2), India (4), South Korea (7), Japan (9) and Singapore (10).

The Australian Strategic Policy Institute published an article urging the government to regulate AI to contain the risks of bioterrorism.

Both generative AI, such as chatbots, and narrow AI designed for the pharmaceutical industry are on track to make it possible for many more people to develop pathogens. In one study, researchers used in reverse a pharmaceutical AI system that had been designed to find new treatments. They instead asked it to find new pathogens. It invented 40,000 potentially lethal molecules in six hours. The lead author remarked how easy this had been, suggesting someone with basic skills and access to public data could replicate the study in a weekend.

In another study, a chatbot recommended four potential pandemic pathogens, explained how they could be made from synthetic DNA ordered online and provided the names of DNA synthesis companies unlikely to screen orders. The chatbot’s safeguards didn’t prevent it from sharing dangerous knowledge.

Singapore’s Minister for Digital Development and Information, Josephine Teo, explained the country’s interest in fostering practical AI tools at a recent industry conference:

There are many countries that would like to gain leadership in AI, for example, by making sure that they are involved in the development of the most cutting-edge, frontier models. We adopt a different approach. The approach that we want is, to put emphasis on enterprise use. We want to see many use cases being experimented upon, and it is this emphasis on having real activities, with companies and organisations benefiting from the use of AI tools

Advocacy

South Korea is conducting a survey regarding regulatory sandbox methods for data-intensive industries until 13 December.

The Japan Fair Trade Commission (JFTC) opened a public comment period, running until 22 November, on Generative AI Market Dynamics and Competition:

Given the rapidly evolving and expanding generative AI sector, the JFTC has decided to publish this discussion paper to address potential issues and solicit information and opinions from a broad audience. The topics outlined in this paper aim to contribute to future discussions without presenting any predetermined conclusions or indicating that specific problems currently exist. The JFTC seeks insights from various stakeholders, including businesses involved in different layers of generative AI markets (infrastructure, model, and application layers as described in Section 2), industry organizations, and individuals with knowledge in the generative AI field.

Sri Lanka’s National AI Strategy is open for consultation until 6 Jan 2025.