#17 Asia AI Policy Monitor

2025 Year Ahead; AI Acts in Korea, Japan and Taiwan; privacy rules on AI in South Korea and the Philippines; China's AI-related patent rules; AI advocacy opportunities in Australia, New Zealand and India.

Thanks for reading this month’s newsletter along with over 1,700 other AI policy professionals across multiple platforms to understand the latest regulations affecting the AI industry in the Asia-Pacific region.

Do not hesitate to contact our editors at seth@apacgates.com if we missed any news on Asia's AI policy!

2025 Year Ahead

Following a busy 2024 for AI policy in Asia, here are our expectations for Asia's AI policy in 2025.

More talk of indigenous AI, which in practice means more industrial policy to bring data, compute and talent onshore.

The political economy of AI's energy use gets more attention in developed Asia: Japan, Taiwan, Singapore and South Korea consider alternative energy sources for AI compute.

More AI legislation - on top of South Korea’s recent AI Basic Act - with important distinctions from the EU AI Act (no Brussels Effect).

Copyright rules around Asia are amended to be friendlier to the AI industry on the use of copyrighted material for training data, but expect at least one lawsuit against genAI companies for copyright infringement.

Expect multi-pronged enforcement efforts against deepfake pornography, or non-consensual intimate imagery.

Industrial policy trumps the softer side of AI governance, in terms of dollars and attention from governments across Asia.

Discussions of ethical military AI in Asia become more urgent.

Privacy

The Philippines' National Privacy Commission (NPC) issued an advisory with guidelines on the use of personal data in AI systems. The advisory sets out obligations for personal information controllers (PICs) under the Data Privacy Act of 2012 (DPA) in six areas:

Transparency. PICs shall inform their data subjects of the nature, purpose, and extent of the processing of personal data when such processing is involved in the development or deployment of AI systems, including its training and testing.

Accountability. PICs shall be accountable for the processing of AI systems and for the outcomes and consequences of such processing when personal data is involved.

Fairness. PICs shall ensure that personal data is processed in a manner that is neither manipulative nor unduly oppressive to data subjects. As such, PICs shall implement mechanisms to identify and monitor biases in the AI systems and to limit such biases and their impact on the data subjects.

Accuracy. PICs must maintain the accuracy of personal data to ensure the fairness of the output of the AI systems.

Data Minimization. PICs shall exclude, by default, any personal data that is unlikely to improve the development or deployment of AI systems, including its training and testing.

Lawful Basis for Processing. PICs shall determine the most appropriate lawful basis under Sections 12 and 13 of the DPA prior to the processing of personal data in the development or deployment of AI systems, including its training and testing.

South Korea's Personal Information Protection Commission (PIPC) adopted a guide to creating synthetic data. The guide aims to support the safe creation and use of synthetic data across various sectors.

The guidebook presents the steps of synthetic data creation and utilization in order to practically respond to the possibility of personal information identification: ①preliminary preparation→②synthetic data creation→③verification of safety and usability→④evaluation by the deliberation committee→⑤usage and safe management.

In addition, it provides detailed procedures for each stage of creation and utilization, such as due process for the entity creating and utilizing synthetic data, preprocessing methods for the original data, and methods and indicators for verifying safety and usability. A checklist and example documents covering the entire creation process are also provided to help those in charge understand it easily.

South Korea’s PIPC adopted the Artificial Intelligence (AI) Privacy Risk Assessment and Management Model.

The main contents of the risk management model are as follows:

① Procedure for AI privacy risk management

AI is used in a wide variety of contexts and purposes, so the data requirements (type, form, scale, etc.) and processing methods are different. Therefore, as a starting point for determining and managing the nature of the risk, ① the specific types and use cases of AI are identified. Based on this, specific ② risks can be identified by AI type and use case, and ③ qualitative and quantitative risk measurements can be performed, such as the probability of risk occurrence, severity, priority, and acceptability. After that, ④ the risks can be systematically managed by establishing safety measures proportional to the risks.

It is desirable that such risk management be carried out from the planning and development stage of AI models and systems, from a privacy by design (PbD) perspective, for early detection and mitigation of risks, and it is recommended that it be repeated periodically as the environment changes, for example as systems advance.
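The measurement step in the procedure above (probability of occurrence, severity, priority and acceptability) is commonly done with a likelihood × severity risk matrix. The Python sketch below illustrates that generic technique only; it is not the PIPC's own method, and the 1-5 scales, the acceptability threshold of 6 and the example risks are hypothetical assumptions.

```python
from dataclasses import dataclass

# Hypothetical threshold: scores above this would call for safety measures.
# The PIPC model does not prescribe any particular scale or cut-off.
ACCEPTABLE_MAX_SCORE = 6


@dataclass
class PrivacyRisk:
    name: str        # a risk identified for one AI type / use case
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (almost certain)
    severity: int    # hypothetical scale: 1 (negligible) to 5 (critical)

    @property
    def score(self) -> int:
        # Generic risk-matrix score: likelihood multiplied by severity.
        return self.likelihood * self.severity

    @property
    def acceptable(self) -> bool:
        # A risk is treated as acceptable if its score stays at or below the threshold.
        return self.score <= ACCEPTABLE_MAX_SCORE


def prioritise(risks: list[PrivacyRisk]) -> list[PrivacyRisk]:
    """Order risks from highest to lowest score, breaking ties by severity."""
    return sorted(risks, key=lambda r: (r.score, r.severity), reverse=True)


if __name__ == "__main__":
    # Made-up, illustrative entries for a hypothetical generative AI service.
    risks = [
        PrivacyRisk("training data memorization", likelihood=3, severity=4),
        PrivacyRisk("inference of sensitive traits", likelihood=2, severity=5),
        PrivacyRisk("excessive log retention", likelihood=2, severity=2),
    ]
    for r in prioritise(risks):
        status = "acceptable" if r.acceptable else "needs safety measures"
        print(f"{r.name}: score={r.score} ({status})")
```

Running it lists memorization (12) and sensitive-trait inference (10) as needing safety measures and log retention (4) as acceptable. This mirrors the model's idea that safety measures should be proportional to the measured risk, with the highest-priority items addressed first.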

② Types of AI privacy risks

Next, we presented examples of AI risk types in the privacy context. We focused on new infringements of data subjects' rights and risks of violating personal information protection laws that arise from the unique characteristics and functions of AI technology and its data requirements, as identified through domestic and international literature research and corporate interviews.

Specifically, risks were presented along the AI life cycle, covering the planning and development stages of AI models and systems and the service provision stage, and the service provision stage was further divided into generative AI and discriminative AI, adding specificity by AI use case and type.

③ Measures to reduce AI privacy risks

In addition, we also provided guidance on management and technical safety measures to reduce risks. However, not all mitigation measures are mandatory, and we provided guidance so that the optimal combination of safety measures can be established and implemented based on the individual context, such as the results of identifying and measuring specific risks.

④ AI Privacy Risk Management System

In the AI environment, various digital governance elements such as personal information protection, AI governance, cybersecurity, and safety/trust are interrelated. Accordingly, reorganization of traditional privacy governance is necessary, and the leading role and sense of responsibility of the Chief Privacy Officer (CPO) are emphasized. In addition, it is desirable to establish an organization that can perform a multifaceted and professional assessment of risks and establish a policy that ensures systematic risk management.

Intellectual Property

China's National Intellectual Property Administration (CNIPA) issued guidance on AI-related patent applications.

1. The inventor must be a natural person

Section 4.1.2 of Chapter 1 of Part 1 of the Guidelines clearly states that “the inventor must be an individual, and the application form shall not contain an entity or collective, nor the name of artificial intelligence.”

Multilateral

India and the US agreed to a strategic technology partnership, including on AI.

Building New Collaboration around AI, Advanced Computing, and Quantum

Developing a government-to-government framework for promoting reciprocal investments in AI technology and aligning protections around the diffusion of AI technology;

Strengthening cooperation around the national security applications of AI, following the U.S. government’s recent issuance of a National Security Memorandum on AI last fall, and promoting safe, secure, and trustworthy development of AI;

The OECD, whose members include Japan, South Korea, Australia and New Zealand from the Asia-Pacific, issued a report on the pilot phase of the reporting framework for the Hiroshima Process International Code of Conduct for Organizations Developing Advanced AI Systems. The report summarizes the strengths and weaknesses of the reporting framework identified by participants:

Strengths

Comprehensive coverage of AI governance topics: One of the most frequently cited strengths of the reporting framework was its comprehensive coverage of AI governance topics, ensuring that all critical areas of AI development, risk management, and compliance are addressed.

Direct alignment with the Hiroshima Code of Conduct: Another notable strength, mentioned by several organisations, is the framework’s alignment with the Hiroshima Code of Conduct, as it reflects a set of internationally recognised actions to promote safe, secure and trustworthy AI. It provides organisations with clear expectations and a trusted foundation for compliance.

Potential to become an international mechanism for AI reporting, promoting alignment across AI governance frameworks: Additionally, several respondents highlighted the framework’s potential to become an international mechanism for AI reporting, particularly in its ability to promote alignment with other AI governance reporting frameworks.

Weaknesses

Explain key terms: Nine respondents requested explanations of key terms identified as ambiguous, such as "advanced AI systems," "unreasonable risks," and "significant incidents."

Improve alignment with other voluntary reporting mechanisms: Six organisations suggested improving alignment with other voluntary reporting mechanisms, such as the Frontier AI Safety Commitments and the White House AI Voluntary Commitments, to make filling out the report easier.

Clarify the use and sharing of responses: Six respondents expressed a need for further information regarding how the input they provide would be used and shared. Of these, four noted concerns about public disclosure of confidential or commercially sensitive information.

In the News & Analysis

The UN Policy Network on AI (PNAI) released its 2024 Report on AI Liability, Environmental and Labor Impact. Asia AI Policy Monitor's editor, Seth Hays, contributed to the liability section, sharing perspectives on AI liability from Singapore, South Korea and Japan.

The key points to consider for AI liability will likely resemble those in other high-risk advanced technology areas, such as civilian nuclear energy:

minimum amount of liability;

obligation for the operator to cover liability through insurance or other financial security;

limitation of liability in time; and

equal treatment of victims, irrespective of nationality, domicile or residence.

A Philippine government agency is building a local LLM, iTanong, based on local languages.

Before being released to the public, iTanong will be deployed in government agencies, where it will serve as a replacement for the Citizen’s Charter, a document in the lobby of every government office in the Philippines that details the office’s policies. While the Citizen’s Charter is only updated once a year, iTanong can be updated as needed, Peramo said.

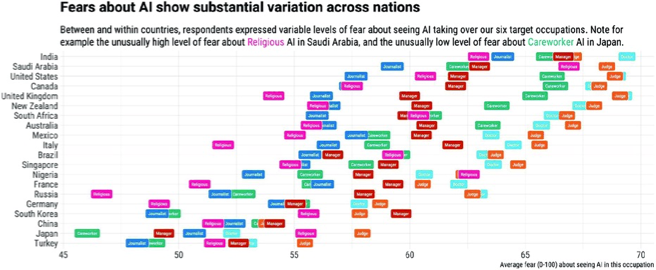

A research paper maps AI fears by country and professional work domain, with Asian countries at both ends of the spectrum.

Country-level fears were the highest in India, Saudi Arabia, and the United States (with average fear higher than 64) and the lowest in Turkey, Japan, and China (with average fear lower than 53)…

Taiwan’s budget politics may interfere with initiatives to boost more chip innovation and AI projects.

Taiwan's Ministry of Science and Technology estimate comes after the Ministry of Economic Affairs on Tuesday warned that the country's collaboration with tech companies, such as Micron (MU.O), AMD (AMD.O) and Nvidia (NVDA.O), could be affected and that insufficient future budgets would affect Taiwan's international AI technology partnerships.

The economic ministry’s spending for next year is projected to be reduced by T$29.7 billion, with T$11.6 billion earmarked for cuts to technology projects, according to its calculations.

This piece reflects on Southeast Asia’s need for comprehensive AI governance structures in 2025.

The region's AI governance initiatives, from establishing a national strategy to creating an AI governance framework, are primarily led by a small number of countries with more advanced digital economic systems. Singapore has launched 25 governance initiatives, including the National AI Strategy and Model AI Governance Framework. Vietnam, Thailand and Indonesia have launched six, five and one initiatives, respectively. Several other countries are in the initial stages of developing AI, such as Cambodia, which in 2023 issued detailed recommendations on how the government should facilitate…

Implementing strategic AI industrial policy in the region is critical to ensure that the significant economic impact of AI can be equally harnessed by the member states. Industrialising AI is the key to increasing competitive advantage in AI. This can be done by strengthening governments’ investments in AI initiatives while enhancing public and private partnerships to support the region’s AI manufacturing. Other major Asian AI developers such as China, Taiwan, Japan and India have improved the production of graphics-processing units and the type of chips used to train large language models.

Experts in Japan call for regulation of deepfakes, as the country ranks third in the world in genAI image production.

The increasing popularity of such websites has raised significant concerns due to the harmful content being created. These platforms allow users to upload images and generate fake explicit content, contributing to the spread of deepfakes, especially on social media.

Experts have called for the creation of rules and regulations to combat the misuse of deepfake technology. Ichiro Sato, a professor at Japan's National Institute of Informatics, emphasized the importance of passing laws and improving information literacy to mitigate the negative impact of deepfakes, especially given the damage they cause to people's privacy and reputations.

Trust & Safety

The Indian city of Lucknow spent ₹100 crore (1 billion rupees) on AI surveillance cameras, with mixed results.

And yet, the logic of surveillance seems irrefutable: if someone is watching, people behave better. We install cameras outside our homes, in shops, in schools. When my own cat went missing, I instinctively requested access to ten CCTV feeds to quell my worries. The belief in watching as deterrence runs so deep that it feels like common sense. Who wants to be the person who said "no" to a system that might have prevented an assault?

That's the trap: technology presents itself as the solution to an urgent problem—in this case, women's safety. The trade-offs—normalised monitoring and loss of privacy—become necessary compromises. Those questioning surveillance must prove it won't prevent harm—an impossible task—while those promoting it need only gesture at potential benefits.

Legislation

Japan’s Prime Minister attended the AI Strategy Council and called for legislation to be developed and submitted to the Diet. The Prime Minister said:

…AI also entails various risks. These must be addressed. In line with the interim summary that the chair explained, I would like Minister Kiuchi to work with Minister Taira and other relevant cabinet ministers to take steps to quickly submit to the Diet a new bill that balances the acceleration of AI innovation with risk responses. In order to strengthen the government's command center function for AI policy, we will establish an AI Strategy Headquarters composed of all cabinet ministers.

Guidelines for the procurement and use of AI will be developed. Each ministry and local government will grasp the actual situation of AI introduction in infrastructure and other areas, and will proceed with measures such as reviewing the guidelines. We will develop guidelines in accordance with the Hiroshima AI Process and encourage private businesses to comply with them, and will take necessary measures such as investigating malicious cases and collecting information from AI developers.

Taiwan’s AI Basic Act is set for a 2025 legislative debate.

Three to four versions of the AI Basic Act are currently under review in Congress, awaiting alignment with the executive branch's draft. However, significant differences between versions and the limited legislative timeframe due to budget discussions make it unlikely that the Act will pass by Lunar New Year in early 2025.

NSTC Minister Cheng-wen Wu initially announced plans to submit the AI Basic Act draft to the executive branch by October's end, with the goal of reaching Congress for review soon after. However, the draft remains in legal processing, delaying its submission. The NSTC has opted to wait for international regulatory standards, aiming to align Taiwan's framework with global guidelines.

During the draft's public review period, several provisions encountered opposition from civil organizations. The NSTC must also assess potential conflicts with existing regulations and finalize which body—the NSTC or the MODA—will assume oversight. Clear and precise language in the draft is crucial to avoid future legal ambiguities, contributing to delays in advancing the AI Basic Act beyond its initial timeline.

South Korea's National Assembly passed the AI Basic Act, which is set to take effect in January 2026. Summaries of the Act and comparisons with the EU AI Act help clarify its direction and intent.

When comparing the EU’s AI Act and Korea’s AI Act bill, we see a clear thematic convergence:

Both identify certain areas where AI poses heightened risks (EU Article 6 and Annex III vs. Korean Article 2(4)).

Both mandate transparency and user awareness (EU Article 52 vs. Korean Article 31).

Both require robust risk management, documentation, and oversight for high-risk/high-impact AI (EU Articles 9-15 vs. Korean Articles 32, 34).

Both are founded on principles of human rights, dignity, and trust, although the EU relies on existing EU values and Korea explicitly calls for AI ethics guidelines (EU Recitals and cross-references vs. Korean Articles 3, 27).

Both establish oversight bodies and enforcement mechanisms (EU Articles 56-58 vs. Korean Articles 7, 11, 12, and enforcement in 40-43).

Advocacy

New Zealand's Privacy Commissioner opened a public consultation, running until 14 March, on its draft Biometric Processing Privacy Code of Practice, which includes 12 proposed rules.

India's Ministry of Electronics and Information Technology (MeitY) opened a public consultation, running until 27 January, on its draft report on AI Governance Guidelines Development.

The Subcommittee’s report highlights the importance of a coordinated, whole-of-government approach to enforce compliance and ensure effective governance as India's AI ecosystem evolves. Its recommendations, based on extensive deliberations and a review of the current legal and regulatory context, aim to foster AI-driven innovation while safeguarding public interests.

Australia’s Treasury opened a public comment period until February 15 on digital competition.

This proposal paper seeks information and views to inform policy development on a proposed new digital competition regime with upfront rules to promote effective competition in digital platform markets by addressing anti-competitive conduct and conduct that creates barriers to entry or exploits the market power of certain digital platforms.

The Asia AI Policy Monitor is the monthly newsletter for Digital Governance Asia, a non-profit organization with staff in Taipei and Seattle. If you are interested in contributing news, analysis, or participating in advocacy to promote Asia’s rights-promoting innovation in AI, please reach out to our secretariat staff at APAC GATES or Seth Hays at seth@apacgates.com.